Introducing the TaMPER Framework: A Practical Guide to Using AI in Research Administration

By Michael Overton, Barrie Robison, Luke Sherman, Jason Cahoon, and Sarah Martonick

In a previous post on common sense in the world of AI, we discussed how effective AI use starts with an assessment of the task at hand. As is the case with any tool, carefully aligning the right tool with the task helps us avoid frustrating dead ends when we put these tools to work.

For Research Administration (RA) organizations, which navigate high compliance standards while handling lengthy, complex documents, there are four fundamental benchmarks for assessing an AI tool for administrative tasks: security, accuracy, reproducibility, and flexibility. Each stands as one of four pillars for reliable AI integration in RA.

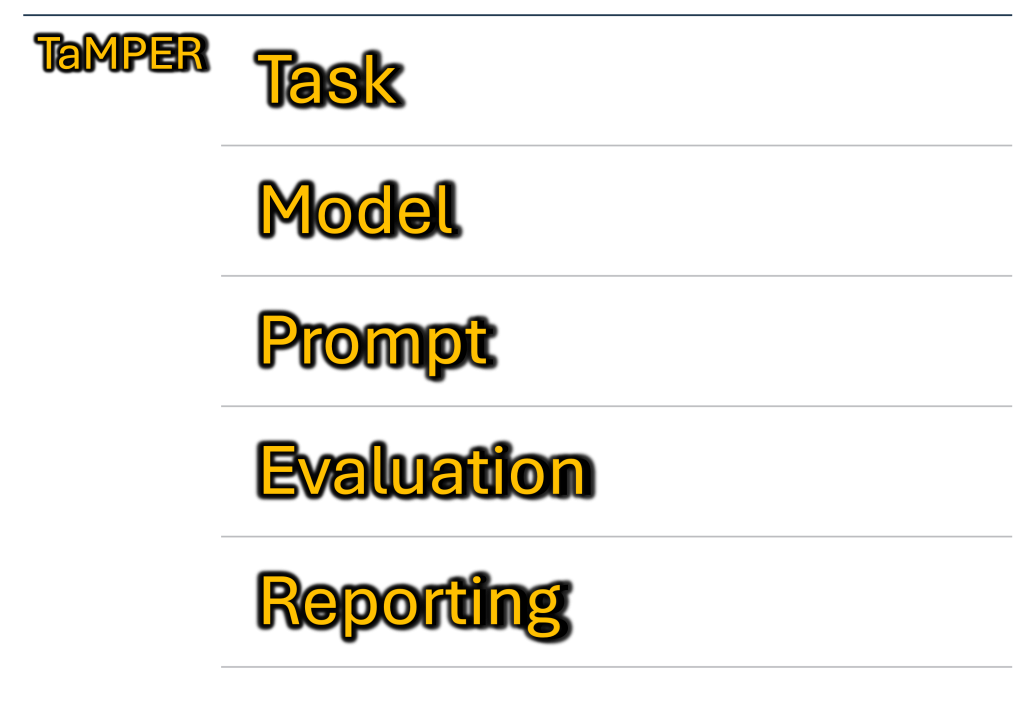

However, without a carefully considered approach, readily available AI tools are unlikely to satisfy the four pillars for RA organizations. Fortunately, there are actions that RAs can take to reliably meet their high data-handling standards when using AI tools. In this post, we will discuss these actions through the TaMPER Framework, a model for administrative professionals to securely and effectively leverage the power of AI tools for administrative tasks.

TaMPER is an acronym for Task, Model, Prompt, Evaluation, and Reporting. Each of these terms marks a critical step that RA professionals must take to maintain a secure, reliable, and scalable practice every time they implement an AI tool.

So, in the interest of establishing our bearings with this framework, let’s walk through these steps together…

T is for Task (What specific task is to be done?)

Before you start, define your goal for the task clearly. A well-defined task is the foundation of any valuable result, and it also informs your assessment of both the AI tool and its outputs. Sometimes a task will be one step in a multi-step workflow. Common tasks for Research Administrators include summarization, information extraction, classification, and drafting new documents.

M is for Model (What AI tool will you use?)

“Model” is the technical term for the specific AI engine that you select (such as GPT-4, Claude 3.5, or Llama 3). The most important decision in model selection is choosing between a public model and a private, secure one. Public models are more easily accessible, but for most RA tasks, they will fail to meet security compliance standards. By contrast, private models can be hosted on secure, local servers, making them the only safe choice when working with confidential data.

P is for Prompt (How will you instruct the AI tool?)

A “prompt” is the instruction that you give the AI tool. The quality of an AI’s output depends largely on the quality of your prompt. A clear and specific prompt drastically increases the chances that a model will produce a useful output. A great prompt will include…

- Role: Tell the AI exactly who it should be. “Act as a university compliance officer.”

- Context: Provide the necessary background information. “…you’re reviewing a new grant award from the National Science Foundation.”

- Task: Give a clear, specific instruction. “…identify all sub-award requirements and reporting deadlines.”

- Format: Specify how you want the answer structured. “…present the information in a table with two columns: ‘Requirement’ and ‘Deadline’.”
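The four elements above can be assembled into a single prompt programmatically. The following is a minimal sketch, not part of the TaMPER materials: the `PromptParts` class and `build_prompt` function are hypothetical names introduced for illustration, and the example text is taken from the list above.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """The four prompt elements (hypothetical container, for illustration only)."""
    role: str
    context: str
    task: str
    output_format: str


def build_prompt(parts: PromptParts) -> str:
    """Join the four elements into one instruction, one element per line."""
    return "\n".join(
        [parts.role, parts.context, parts.task, parts.output_format]
    )


example = PromptParts(
    role="Act as a university compliance officer.",
    context="You're reviewing a new grant award from the National Science Foundation.",
    task="Identify all sub-award requirements and reporting deadlines.",
    output_format=(
        "Present the information in a table with two columns: "
        "'Requirement' and 'Deadline'."
    ),
)

prompt = build_prompt(example)
print(prompt)
```

Keeping the elements as separate fields makes it easy to reuse the same role and format across tasks while changing only the context and task.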

E is for Evaluation (How will you check the AI’s work?)

Evaluating outputs is critical for building trust and moving AI into official RA workflows. AI models can make mistakes, invent facts, or misinterpret instructions, so every output needs review by someone who knows the material. RA professionals are the experts, not AI tools.
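One simple first-pass check, which supports expert review but does not replace it, is to confirm that items the AI tool extracted actually appear in the source document. The sketch below is only an illustration under that assumption: `find_unsupported` is a hypothetical helper, and the source text and extracted items are made-up sample data.

```python
def find_unsupported(items: list[str], source_text: str) -> list[str]:
    """Return the items that do not appear verbatim in the source.

    Matching ignores letter case and differences in whitespace.
    """
    normalized_source = " ".join(source_text.split()).lower()
    return [
        item
        for item in items
        if " ".join(item.split()).lower() not in normalized_source
    ]


# Made-up sample data, for illustration only.
source = (
    "The annual report is due September 30, 2025. "
    "Sub-awards require prior written approval."
)
extracted = ["September 30, 2025", "prior written approval", "March 1, 2026"]

flagged = find_unsupported(extracted, source)
print(flagged)  # items a reviewer should look at first
```

An item that passes this check can still be wrong in context, so a flagged list only tells the reviewer where to start.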

R is for Reporting (How will you document your process?)

Reporting AI usage turns a one-time success into a documented, repeatable, and scalable workflow within your organization. With the right tools in place, keeping a clear record ensures that you or a colleague can reproduce tasks later, while also enabling an auditable process that builds institutional trust.
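As one way to picture such a record, the sketch below stores the Task, Model, Prompt, and Evaluation for a single run as one JSON line. The field names, the `TamperRecord` class, and the helper functions are assumptions made for this example, not a format prescribed by the TaMPER Framework, and the model name is a placeholder.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class TamperRecord:
    """One documented AI run (hypothetical structure, for illustration only)."""
    task: str
    model: str
    prompt: str
    evaluation: str
    recorded_at: str


def make_record(task: str, model: str, prompt: str, evaluation: str) -> TamperRecord:
    """Create a record stamped with the current UTC time."""
    return TamperRecord(
        task=task,
        model=model,
        prompt=prompt,
        evaluation=evaluation,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def to_json_line(record: TamperRecord) -> str:
    """Serialize a record as a single JSON line, suitable for appending to a log."""
    return json.dumps(asdict(record), ensure_ascii=False)


record = make_record(
    task="Extract sub-award requirements and reporting deadlines",
    model="private-local-model (placeholder name)",
    prompt="Act as a university compliance officer. ...",
    evaluation="Reviewed by an RA professional against the award document",
)
line = to_json_line(record)
print(line)
```

Appending one such line per run to a shared log gives a colleague what they need to rerun the same task with the same model and prompt.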

While this post provides an overview of the TaMPER Framework, we encourage you to check out our Guide to Using AI in Research Administration for a deeper dive into each of these steps (the deep dive begins on page 3). We also want to make sure that these steps are accessible and actionable to users, which is why we supplement our deep dive with the TaMPER AI Workflow Design Worksheet. This document is a fillable PDF that guides the user to design an actionable plan for applying the TaMPER Framework to a task. This worksheet helps RA professionals both anticipate and navigate the potential compromises that AI tools can introduce, paving the way for secure and reliable AI use.

And both resources will help you take on the challenge that we pose to our readers…

The best way to gain familiarity with the TaMPER Framework is to put it into practice, and we encourage you to do so. We challenge you to apply the TaMPER Framework to a fun, low-stakes task that you are interested in using AI for. Some examples include writing a movie review, a piece of fan fiction, or a new campfire song. Then, after you gain some comfort with the TaMPER Framework, try to apply it to a medium-stakes task (a professional task that doesn’t contain any sensitive information). Some examples include revising an email (for clarity, organization, and/or tone) or creating a structured to-do list for your workday.

We always value feedback from our community of practice, so we want to know about your experience applying the TaMPER Framework. How do your results compare to your previous results when using AI? Are there aspects of the process that remain challenging? Let us know in the comments below!

This framework is not only incredibly helpful but also an important step toward ensuring that AI users approach these tools in the right way; understanding how to craft a prompt is only one part of the equation. Considering security, reliability (reproducibility), and accuracy is pivotal in scaling AI use, especially in the highly regulated field of research administration. Thank you to the concept and blog authors for such a thoughtful and well-written introduction to this framework. I am excited to put it to work.

This framework has really helped me better understand prompt engineering. Thanks for sharing!

I’m glad it could be helpful, Katie! Thank you for reading!