Introducing Vandalizer: An AI Tool Built for Research Administration

By Jason Cahoon, Nathan Layman and Dashiell Tyler

Central to the AI4RA project is the development of open-source artificial intelligence (AI) tools that are designed to augment Research Administration (RA) workflows. Technological augmentation is in high demand across the RA field, as Research Administrators (RAs) navigate evolving research and data environments. AI tools show exciting potential to augment RA by streamlining routine administrative tasks, yet commercially available AI tools do not reliably meet RA offices’ benchmarks for accuracy, reproducibility, flexibility and security.

To meet these benchmarks with the power of AI, collaborators working on the AI4RA initiative have been developing Vandalizer, an AI management tool designed specifically for RA workflows. Through Vandalizer, administrators can create and apply AI-powered workflows that improve consistency and reliability across their document and data processing tasks. Vandalizer was developed by a team of developers, data scientists, and other technically inclined personnel at the University of Idaho. The design of Vandalizer was informed by their ongoing collaboration with RA professionals at the University of Idaho and Southern Utah University.

The interdisciplinary approach to designing Vandalizer has positioned its developers to create a practical and reliable tool, one that produces outputs that are accurate, reproducible, and flexible. Vandalizer is also designed to identify and reduce security risks: it is equipped with systems that detect sensitive information and, upon detection, prompt the user to connect to a secure client. The safety guardrails integrated into Vandalizer empower RAs and RA organizations to leverage the power of AI tools without compromising their security protocols.

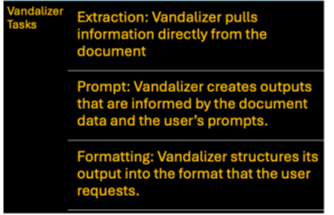

The Vandalizer interface offers workspaces for individual users and organizations. These workspaces are centralized hubs where users manage and store data for the AI-powered document handling processes that they create. These processes fall into three classes of tasks: extraction tasks (where Vandalizer pulls information directly from a document), prompt tasks (where Vandalizer creates outputs that are informed by the document data and the user’s prompt), and formatting tasks (where Vandalizer structures its outputs into the format that the user requests).

After RAs create and store AI-powered tasks, they can assemble them into a workflow: a coordinated arrangement of tasks in which the outputs of one or more tasks flow into the inputs of one or more downstream tasks. For example, one might build a workflow where Vandalizer 1) extracts the deadline dates from a Research Funding Announcement, then 2) formats these deadlines into a table in chronological order (the outputs of an extraction task feeding a downstream formatting task).
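To make the extract-then-format idea concrete, here is a minimal Python sketch of a two-step workflow. It is an illustration only and does not use Vandalizer's actual interface: the function names, the sample announcement text, and the regular expression standing in for an AI-powered extraction task are all assumptions made for this example.

```python
import re
from datetime import date

# Hypothetical sample text standing in for a funding announcement.
ANNOUNCEMENT = """\
Full proposals are due 2026-03-15.
Letters of intent are due 2026-01-10.
Budget justifications are due 2026-02-01.
"""


def extract_deadlines(text):
    """Extraction step: pull (label, date) pairs from lines like 'X are due YYYY-MM-DD'.

    A regular expression stands in for the AI model a real extraction task would use.
    """
    pattern = re.compile(
        r"^(.*?) (?:is|are) due (\d{4})-(\d{2})-(\d{2})", re.MULTILINE
    )
    return [
        (m.group(1), date(int(m.group(2)), int(m.group(3)), int(m.group(4))))
        for m in pattern.finditer(text)
    ]


def format_deadlines(deadlines):
    """Formatting step: render the deadlines as a plain-text table, earliest first."""
    rows = sorted(deadlines, key=lambda item: item[1])
    lines = ["Deadline   | Item"]
    lines += [f"{due.isoformat()} | {label}" for label, due in rows]
    return "\n".join(lines)


def run_workflow(text):
    """Workflow: the output of the extraction step is the input of the formatting step."""
    return format_deadlines(extract_deadlines(text))


print(run_workflow(ANNOUNCEMENT))
```

Because each step is a separate function with a clear input and output, the same formatting step could be reused downstream of a different extraction step, which mirrors how tasks are meant to be recombined into workflows.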

Workflows and tasks, after being evaluated for accuracy and reliability, can be easily reapplied to similar documents. This ensures that document and data processing tasks will be repeatable, streamlined, and consistent.

Vandalizer is not designed to replace research administrators, whose specialized expertise in interpreting policies, exercising judgment, and ensuring compliance cannot be replicated. In fact, one of the key strengths of Vandalizer is that it allows RAs to leverage their institutional knowledge to create flexible workflows targeted at addressing common, domain-specific issues.

Currently, collaborators on the AI4RA project are continuing to develop and refine Vandalizer as RA professionals at the University of Idaho and Southern Utah University put Vandalizer to the test in real administrative scenarios. As Vandalizer develops, we are fielding ideas from our community of practice as we work to create secure pathways for others to engage with these tools. If you have experience in this domain, we encourage you to leave your information on our contact page. We will be pleased to connect and learn from your perspective.

Vandalizer supports RA by streamlining routine administrative tasks and supporting data-driven decision-making. Vandalizer’s enhanced efficiencies allow RA professionals to direct their efforts to processes that require their in-depth knowledge and professional experience. To learn more about Vandalizer, we encourage you to check out our Vandalizer Wiki page. There you can learn more about tasks, workflows, and how they come together to support real administrative processes. We also encourage you to keep an eye out for a future AI Trail Blazer post, where we will bring Vandalizer’s capabilities to life by applying them to an RA-specific example.

As we move forward with developing Vandalizer, we seek the support of our community of practice to identify the RA pain points and bottlenecks that AI could address. We want to know which RA tasks you would like to see enhanced with AI. Let us know in the comments below!

Re: ACCURACY: Vandalizer ensures correct data extraction and transformation without hallucinations

I’d love to know the mechanism(s) by which accuracy is being approached.

Also, reading through the Wiki, I noticed that this is geared towards uploading documents.

I’d love to know if it’s possible, or planned, to connect our proposals database and query our data from the Vandalizer interface. (Maybe this is further along in the development plan.)

Hi Christian,

It’s a good question and one we are constantly trying to improve our thinking on. Right now we have a series of tasks that we call “Verified”. These are the ones we hope to start rolling out to the broader community first.

Sometimes accuracy is actually possible to measure. This is usually the case for extraction of values, such as budget numbers or other structured data. Here we create a test set, preferably of real data or, failing that, of synthetic data with known truths. Then we do a thorough analysis and can state the actual accuracy as a percentage.
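As a rough illustration of what "accuracy as a percentage" can mean for extracted values, the Python sketch below compares extracted fields against known truths using exact matching. This is not Vandalizer's evaluation code: the function name, the field names, and the values are made up for the example, and a real analysis may score matches differently.

```python
def extraction_accuracy(predicted, expected):
    """Return the percentage of expected fields whose extracted value matches exactly."""
    if not expected:
        raise ValueError("expected values must not be empty")
    correct = sum(
        1 for field, truth in expected.items() if predicted.get(field) == truth
    )
    return 100.0 * correct / len(expected)


# Hypothetical known truths for one synthetic test document.
expected = {
    "total_budget": "250000",
    "indirect_rate": "0.45",
    "start_date": "2026-01-01",
    "sponsor": "NSF",
}

# Hypothetical extraction output with one wrong value (indirect_rate).
predicted = {
    "total_budget": "250000",
    "indirect_rate": "0.54",
    "start_date": "2026-01-01",
    "sponsor": "NSF",
}

print(f"{extraction_accuracy(predicted, expected):.1f}%")  # prints "75.0%"
```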

The other set, larger and more challenging, consists of things that need to be correct but can’t be measured directly. The process to verify these involves reviewing a sample set of outputs with experts. We look for both correctness and reproducibility: the sample set needs to be correct and must not drift when run multiple times, and we check both.
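The reproducibility half of that check can be sketched in a few lines of Python: run the same task on the same document several times and count how many distinct outputs appear. This is only an illustration under stated assumptions. The names `drift_report` and `stub_task` are hypothetical, the stub stands in for a real AI-powered task, and correctness still has to be judged by expert review.

```python
from collections import Counter


def drift_report(run_task, document, runs=5):
    """Run a task repeatedly on one document and summarize how much the output varies."""
    outputs = [run_task(document) for _ in range(runs)]
    counts = Counter(outputs)
    _, frequency = counts.most_common(1)[0]
    return {
        "distinct_outputs": len(counts),  # 1 means every run agreed
        "agreement": frequency / runs,    # share of runs producing the most common output
        "stable": len(counts) == 1,
    }


def stub_task(document):
    """Deterministic stand-in for a task; a real task would call an AI model."""
    return document.strip().lower()


print(drift_report(stub_task, "  Award Notice  "))
```

Exact string comparison is the strictest possible definition of drift. For free-text outputs, a looser comparison (for example, comparing only the extracted facts) may be more appropriate.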

Our thinking on this is constantly evolving, and we aim to release a formalized approach to publish “Verified” workflows and get other institutions involved in this part of the equation ASAP.

As for the data integration, it isn’t in the code yet but is absolutely on the future development plan. Lots of use cases are popping up that require it.

Thanks for the questions!

It’s exciting to see Vandalizer being built specifically for research administration! One big challenge I’d love to see AI address is projecting budgets over the full duration of a grant. That’s something no current tool does accurately or efficiently enough, and it would make a real difference in day-to-day grant management.

Following closely! There are so many AI tools to choose from, and so many lenses through which to view their use, especially regarding data security. For RA, I would like to see a trustworthy AI tool (e.g., one that does not steal data to sell later, and one that has been well vetted through a thorough equity lens). Some of the considerations that I would like to see include: basic document analysis; document data collection (i.e., looking for ALN, contact info, terms and conditions, obligated vs. committed funding, contract terms, keywords, reporting dates, etc.); workflow analysis (i.e., a tool that follows the flow of a proposal through submission and awarding and provides metrics on the processing, especially regarding time, process elements, contact info, etc.); process breakdown; reference tools of up-to-the-minute resources (e.g., an executive order comes in and is processed in real time with regard to legal and structural standards); proprietary security (an internal control that limits the release of institutional or personalized data and contains it within a limited local system); and lastly (for today), I would love the AI tool to work directly with whatever grants management system is local. Is there a push/pull element between local data sources? This is an exciting project with an enormous array of possibilities. It will be interesting to see which limitations and boundaries you choose to prioritize. I think this will be a big determinant of the type of end user.

Many of these things are in the works! You can see a list of new features that are included in the latest release of Vandalizer in the October Newsletter that just went out. Additionally, many things like “document data collection” are completely accessible extraction tasks in the current version of Vandalizer. I’m excited to get you all involved once it goes into limited early access. Stay tuned for big things happening in 2026!